Utter Gibberish.

Often when people have to deliver bad or awkward news, they say something along the lines of “I don’t know how to tell you this, so I’m just going to say it.”

In the following instance, however, I literally don’t know how to tell you this. So I’ll just say it: BlaBlaCar has acquired Klaxit.

Don’t take my word for it. Here’s the headline in black and white, and I’m just sorry you had to find out this way.

I could use this as an example of how the world is going to hell - how these days, BlaBlaCars can acquire Klaxits at the drop of a hat - but I’m not going to do that because, like most of you, I have no fucking idea what any of it means.

A cursory glance (I’ll investigate the matter more thoroughly as soon as I’m done with every other task I could possibly accomplish, ever) tells me that this means some bullshit ride sharing app has taken over some other bullshit ride sharing app, which would actually be a more instructive headline, but that’s all.

I appreciate that I’m entering the territory of a man in his late thirties no longer understanding technology, but I don’t think that’s the case, here. It’s not a matter of technology. It’s a matter of my formerly functional grasp of language suddenly being rendered useless by tech startups.

A quick look through the available apps on my TV tells me that I could download and watch Twitch, Plex, Mubi, Pop, Rad, Crunchyroll, Dazn, and Yupp. If you’d asked me to guess, I’d have said three of those were washing powders and at least one was probably a typo, but no, they’re all real.

Not that you’d know. If you told me you already heard about BlaBlaCar taking over Klaxit in the pages of Flonk magazine - a magazine for jaggitting your blimbles - I would have no idea if you were serious.

Which gives me an idea.

For a long time, tedious Silicon Valley types have told us that the internet allows for endless innovation and provides a means for anyone to succeed with their startup company. It’s demonstrable horseshit - it still takes money to make money, and most “new” ideas are just inept rip-offs of older ones in the exact manner of BlaBlaCar and Klaxit basically doing the same thing that Lyft and Uber already did - but there is still a chance to make money based off of the idea that you have an idea. Nobody in Silicon Valley really knows what they’re doing, so they just throw money at anything that seems like it might be promising. It’s like betting on horses, if you had never read a racing form, or seen a horse, and had only a hazy grasp of the concept of running in a circle.

This means that I’m pretty sure I could start going to tech industry events and, as long as I can come up with enough words that sound like a Teletubby having a stroke, someone will give me money. I won’t even need to get changed, as the uniform for genius tech gurus is apparently a stained t-shirt and the sort of jeans you’d wear if you were washing your car, which is how I dress anyway.

I’m going to tell people that I work for Glerm, or Spoot, and if they want more detail than that I’m going to say it’s a vertically integrated AI company with blockchain-encoded algorithms and next-gen multimedia applications. If that’s not enough to get them to invest I’ll just tell them that if they have any questions they can check out my channel on Curdl, or else hit me up on Plork.

I’m not actually going to do any of this, of course, because I have no idea how to get into a tech industry event. Probably you have to arrive in a Klaxit, and that just got a lot harder.

For All You Know, A Computer Wrote This.

As long as I’m on the subject of tech and nonsense, the technological singularity may soon be upon us.

This is good news for those of us who are pretty sure that we’re all doomed anyway but who are sick and tired of all of the preamble. The waiting, as Tom Petty told us, is the hardest part. If civilisation is going to collapse through climate change, unsustainable financial systems, new pandemics or antibiotic-resistant bacteria, I’d rather get it over with instead of having to sit around through this incremental apocalypse. Sometimes it feels as though the downfall of humanity is being directed by Sergio Leone.

Now, we might be looking down the barrel of a Terminator-style apocalypse a lot quicker than anticipated.

The bad news for the “get it over with” crowd is that we’re probably not going to see that outcome, but it’s worth looking at why anyone might think that.

Briefly, a company called OpenAI launched their ChatGPT software about six months back, and it proved startlingly good at things like writing essays or answering complex questions. This comes on the heels of AI imaging software which has become more and more powerful and can now generate almost perfect facsimiles of human beings. AI imaging can give you a photo of a person who doesn’t exist in a room that isn’t real, and ChatGPT could write that non-person’s diary.

This month, OpenAI launched a new, updated version that is a thousand times more complex than the previous iteration, and Google’s parent company, Alphabet, have also launched an interactive A.I. known as Bard.

Incidentally, those who are sick and tired of every Google result being an ad these days might want to try Bing. It used to be famously terrible, but the new Bing runs on (you guessed it) an A.I. algorithm and is actually pretty good.

So. Artificial Intelligence is getting good enough that it’s making people nervous. One software engineer even became convinced that the A.I. he worked with was “alive” and had something that should be considered a soul, which is mind boggling, Asimov-level stuff until you learn that the engineer in question, Blake Lemoine, looked like this:

He refers to himself as a Christian Mystic and transcripts of his conversations with the A.I. leave little doubt that he was just trying to fuck it. You already knew both of those things from looking at the picture, but I typed them out in case you’re having this read to you.

Are we really about to see the Technological Singularity? The point where a computer actually becomes human-level intelligent? A sentient, intelligent computer would be able to examine its own code and improve it, creating a smarter version of itself, which would then be able to do the same thing to its own code, until within a vanishingly short space of time we have computers that are far, far smarter than we are. The Big Bang saw an entire universe explode into being from a single tiny point known as a singularity. The technological singularity would see the same thing happen with A.I. We’d be dealing with computers that were Godlike, from our idiot, mortal perspective.

But honestly, probably not yet.

The answer to “how close is the technological singularity?” has to be couched in language like “probably” because of two competing problems. The first is called the Chinese Room problem.

Alan Turing, the pioneering computer genius and codebreaker, famously came up with the Turing test - in a written conversation, can a computer fool you into thinking it’s a human being?

We passed that point a while ago. Set up a profile on a dating app - or even open an email account somewhere - and spam-bots will start conversations with you that could believably be typed and sent by real people, at least for the first few exchanges. And these, bear in mind, are the low end of the technology. ChatGPT absolutely responds in a believably human way, so we can probably cross the Turing test off the list.

The Chinese Room problem is the next step. Imagine that you’re in a room with a Chinese-to-English dictionary, and someone slides a note under the door in Chinese.

You don’t speak Chinese - nobody does, because it’s not one language but several, you insensitive racist - but you’re able to decipher the note, formulate the reply in English and translate it back, write that reply and slide it back out.

To the person outside of the room, it’s logical to assume that you speak the language, but you don’t. This is essentially what we’re seeing with modern A.I. - it’s not thinking, or at least not in any way we would recognise as “real” thought, but to an outside observer, what it’s doing looks like thinking. You can ask it a question and it can formulate a reply that makes sense and is usually correct.

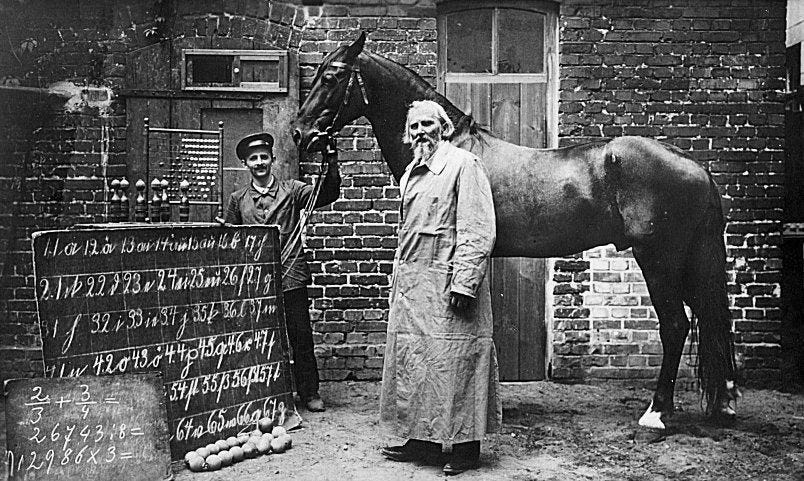

This is related to the Clever Hans effect, by the way. In the early 20th century a German man named Wilhelm Von Osten decided to teach his horse to do sums.

He seemingly succeeded in teaching Hans the horse to do basic arithmetic, and toured for a while with his equine prodigy, but careful investigation eventually showed that no, the horse couldn’t do calculus.

I know, this is the most shocking thing you’ve heard since BlaBlaCar bought Klaxit, but it’s true. The horse was merely picking up on cues from his human trainer - essentially, the horse understood that the noise the human made when he said “What’s two plus one?” meant that it was time to stamp its foot three times. It’s the same as my dog understanding that she has to put her butt on the floor when I say “sit.” She probably doesn’t have much of a Platonic ideal of the concept of “sitting,” but she knows that certain noises I make are designed to generate a certain response from her, and maybe then she gets a treat.

This, as mentioned, is related to the Chinese Room problem and what we’re seeing with A.I.; ChatGPT and Google’s Bard and the others probably don’t understand your question in any deep way. They’re just generating responses based off of their coding.

Anyone still paying attention will have caught the “probably” back there. This is because of yet another concept we run into with this stuff - the so-called Black Box problem.

Essentially, OpenAI and Google and other companies are (for the moment) run and staffed by humans, and humans are lazy. Every single technological leap in our history has been in aid of making our own lives easier, and A.I. is no different. In these instances, computer programmers worked out a while back that they didn’t need to teach an A.I. everything - they just needed to teach it how to learn for itself and then put their feet up. You don’t need to tell someone every story if you just teach them to read and give them a library card.

Need a legal document drawn up? ChatGPT has read thousands of them, so now it knows how to write one for you. Need your homework done in a hurry? ChatGPT has read endless history books and Wikipedia articles and it can write the essay for you if you give it the right parameters.

Unfortunately, what this means is that we don’t exactly know what these A.I.s have taught themselves or how they went about it. Their “minds”, for want of a better word, are sealed units that we can’t peek inside, hence the term “black box.” To return to the earlier analogy, if you turn someone loose with a library card you don’t know what they’re going to end up reading.

Letting computers teach themselves to become smarter with no clear idea how they’re doing it might sound like cloning dinosaurs for a theme park and then also letting them run the park, but I never said this was a wise idea. I’m just explaining why terms like “probably” keep getting thrown around when people say that the technological singularity is probably still a long way off.

To sum up, modern A.I.s probably aren’t thinking for themselves. They’re not really intelligent in the way that a human is (a human brain has about a hundred billion neurons and is still vastly more complex than even the most cutting edge supercomputer) and they’re very unlikely to reach that point any time soon. The best guess that can be made is that programs like ChatGPT are showing what experts are calling the first sparks of something like intelligence, but that’s all.

On the other hand, I’d direct everyone’s attention to an article from the New York Times in 1903, in which it was confidently stated that “to build a flying machine would require the combined and continuous efforts of mathematicians and mechanics for 1-10 million years.”

Orville Wright’s diary from the same day read: “We began construction today.”